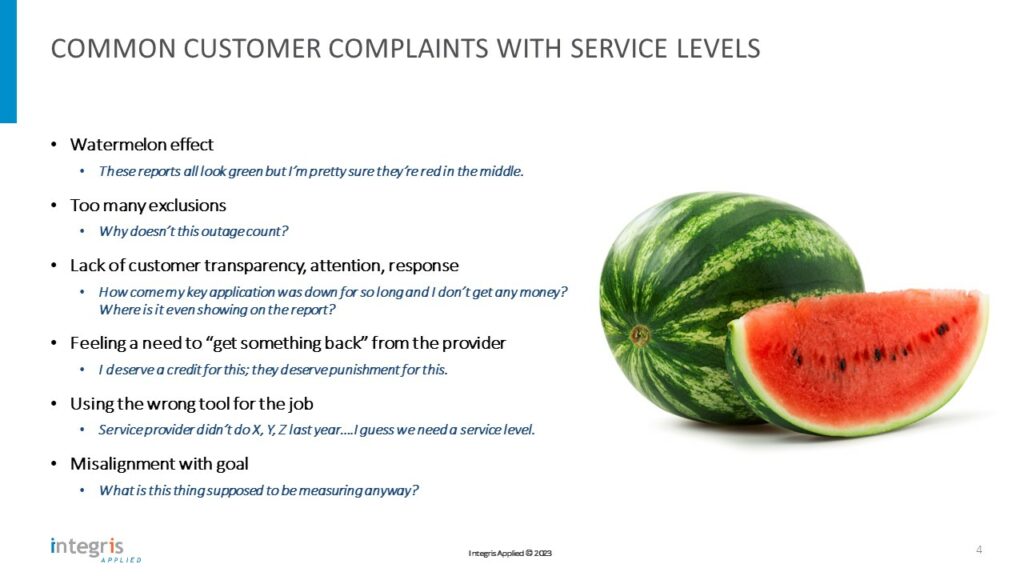

In a world where consumers are routinely asked to rate experiences on a 1-5 scale – seemingly multiple times per day – many firms still grapple with establishing, reporting, managing, and communicating service level metrics effectively. Despite years of accumulated wisdom in IT service delivery and contracting, numerous service management leaders find themselves addressing the same customer complaints. These customer frustrations are not without basis; too frequently, IT delivery teams concentrate solely on internal operations or managing a service provider, neglecting the crucial perspectives of their customers. This article offers actionable advice to help leaders navigate these challenges more effectively.

1.) Recognize that Service Level Metrics are needed for more than just managing internal performance or external contractors.

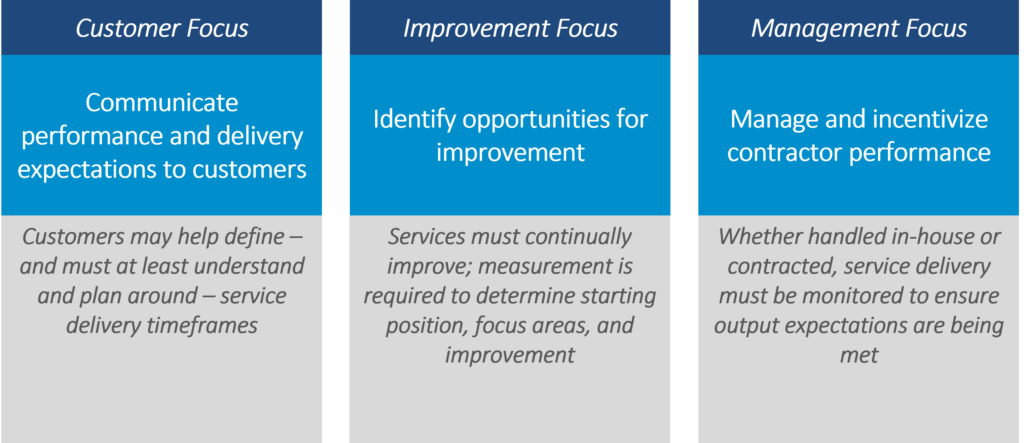

Many service levels and key performance indicators came from service provider contracts. However, we frequently remind clients that they must manage and report service level performance for numerous reasons, including:

- Customer Expectations: Communicating performance and delivery expectations to customers (which could be end users, business units, agencies, or other constituents) allows them to understand and plan around their IT services. Keeping customers informed of current performance also opens a conversation in which they can advise when changes are needed to support their business goals.

- Baselining and Continual Improvement: When embarking on transformational programs, it is important to know the current performance. Customers and other stakeholders may express concerns or complain any time their experience changes, and the central IT department should be able to track and communicate whether it is maintaining or improving performance.

- Managing and Incentivizing Performance: This is the traditional purpose of service levels, and whether services are handled in-house or contracted, service delivery must be monitored to ensure that expectations are being met.

2.) Set metrics based on user experience – not based on the internal piece-parts of a solution.

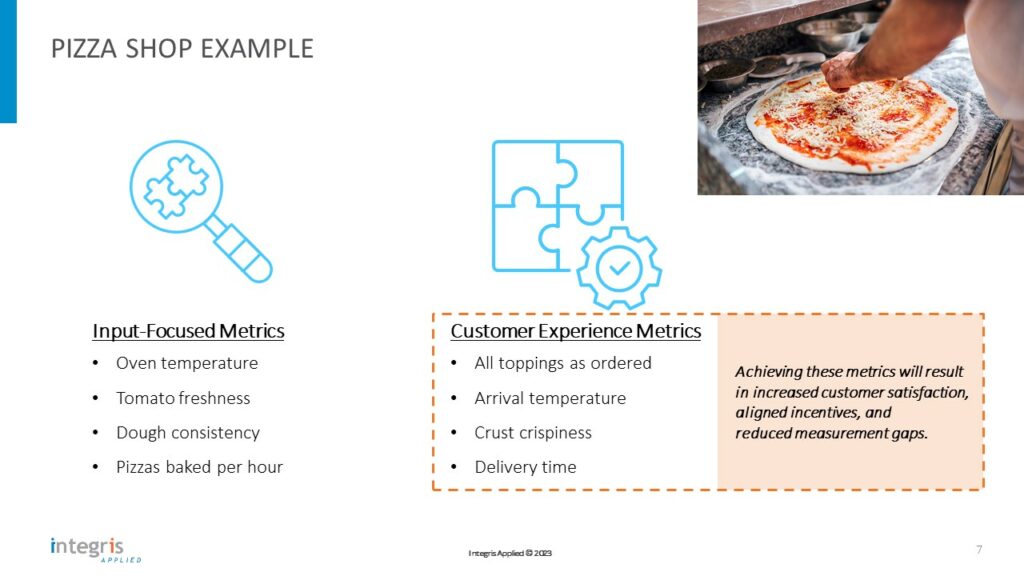

Focusing on service outcomes has multiple benefits such as increasing customer satisfaction, aligning service delivery incentives, and minimizing measurement gaps. A typical issue in service level management is choosing metrics like “Internet circuit uptime,” “server availability,” or “calls handled per help desk agent.” Although these metrics are needed for internal management, they fall short in effectively conveying the full picture to the customer. For instance, a server might be online, but if the logon service is down, it would prevent users from accessing the application.

To further explain this concept, it can be beneficial to consider a non-IT example. Suppose a pizzeria decides to establish service level metrics for its customers. It might opt for metrics such as “tomato freshness,” “dough consistency,” and “pizzas baked per hour.” However, these factors, while important for internal operations, are not what the customer primarily cares about. Instead, a customer is likely to evaluate the pizzeria based on factors such as “delivery time,” “temperature upon arrival,” and “accuracy of toppings.” Prioritizing these customer-centric metrics helps align the pizzeria staff’s efforts towards enhancing customer satisfaction. Simultaneously, they can continue to internally monitor ingredient freshness, staff performance, etc., to ensure the delivery of the desired customer experience.

3.) Start where you are – you can set performance metrics any time based on internal data; don’t look for “industry standards” to solve it.

Clients often presume that the absence of effective service level metrics in their existing contracts, or the lack of readily available key data, precludes them from setting metrics. When they do attempt to establish metrics, they frequently resort to industry-standard data or customer expectations to identify targets. We assert that neither of these methods tends to yield satisfactory results.

First, most industry-standard data focuses on individual components of a solution rather than encompassing the entire customer experience (as recommended in suggestion #2 above). For instance, certain types of Internet circuits in a specific region may demonstrate consistent average uptime. However, the actual customer experience depends on the application’s usability across that Internet circuit, necessitating effective management of the desktop environment, network connectivity, servers, and more. When all these components are aggregated into a managed service, the resulting customer experience uptimes can greatly vary among different firms.
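To illustrate why component figures do not translate directly into customer experience, here is a minimal sketch of the arithmetic. It assumes the components sit in series and fail independently, which is a simplification, and the component names and availability figures are hypothetical rather than drawn from any benchmark.

```python
from math import prod

def end_to_end_availability(component_availabilities):
    """Availability of a service whose components must all be up at once.

    Assumes components are in series and fail independently (a simplification).
    """
    return prod(component_availabilities)

# Hypothetical monthly availability for each piece-part of one service.
components = {
    "desktop environment": 0.999,
    "network connectivity": 0.995,
    "servers": 0.999,
    "logon service": 0.998,
}

overall = end_to_end_availability(components.values())
weakest = min(components.values())

print(f"Weakest single component: {weakest:.2%}")
print(f"End-to-end experience:    {overall:.2%}")
```

Under these assumptions, the end-to-end figure is always lower than the weakest single component, which is why two firms buying the same circuit can report very different customer experience uptimes.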

Moreover, when customers (e.g., end users, business units) are asked to state their own uptime expectations without understanding the current performance, their responses often include vague terms like “better”, “no downtime during the day”, or “one-hour resolution”. Such responses are not conducive to creating a change program, as the IT delivery team needs a clear understanding of their current performance to determine if cost or work is required to improve.

Establishing current performance may actually be simpler than many clients believe. They typically possess data from IT service management tools, help desk records, and the like. By reviewing this data over a period of 6-12 months, an average performance can be calculated to establish an initial baseline. This provides a more grounded starting point for setting and managing service level metrics.
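As a rough sketch of that baseline calculation, the example below averages monthly figures over the review period. The monthly values are hypothetical, the six-month minimum is an assumption taken from the range suggested above, and in practice the data would come from an export of your own service management tool.

```python
from statistics import mean

def baseline(monthly_values, minimum_months=6):
    """Summarize monthly performance figures into an initial baseline."""
    if len(monthly_values) < minimum_months:
        raise ValueError(f"need at least {minimum_months} months of data")
    return {
        "average": mean(monthly_values),
        "worst": min(monthly_values),
        "best": max(monthly_values),
    }

# Hypothetical end-to-end availability (%) for the last 12 months.
monthly_availability_pct = [
    99.2, 98.7, 99.5, 99.1, 98.9, 99.4,
    99.0, 99.3, 98.8, 99.6, 99.2, 99.1,
]

result = baseline(monthly_availability_pct)
print(f"Average: {result['average']:.2f}%")
print(f"Range:   {result['worst']:.1f}% to {result['best']:.1f}%")
```

Reporting the range alongside the average is a design choice: it shows how much performance already varies month to month before any target is set.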

4.) Communicate openly.

Although it may be scary at first, we strongly advocate for central IT teams to report and communicate their service level performance regularly to customers. This can be achieved through a variety of channels, each with its own level of engagement.

At its simplest, teams can maintain an internal website where all relevant metrics are updated and displayed. This passive approach ensures that data is readily accessible to those who seek it, fostering a culture of transparency and accountability. For more active engagement, central IT teams can share these metrics in monthly governance meetings or newsletters. This not only keeps the dialogue about performance ongoing but also allows for immediate feedback and course correction if required.

Frankly, many customers may not actively monitor these metrics – but the act of making them available instills confidence in the internal IT team. This openness signals a commitment to transparency and continual improvement, fostering trust in the internal delivery team.

Moreover, the regular reporting of service level performance provides a baseline historical reference. This allows the IT team to track their progress over time and demonstrates their efforts towards maintaining – or ideally improving – service performance. By documenting and showcasing these performance reports, IT teams can highlight their achievements, learn from past challenges, and make data-informed decisions for future improvements.

Additional Principles

In addition to the above, we recommend clients keep the following in mind when setting service level targets:

- Measure what you’re buying: Many clients want to purchase a performance-based service contract, but fall into the trap of pricing or measuring inputs rather than outputs. This mixes up incentives, reduces value, and results in disputes.

- Use the right tool for the job: Service level metrics are typically monthly, operational performance measures for enterprise contract management; they cannot solve everything that might need to be reported or managed.

- Move the spotlight: Expectations and performance needs may change over time, and therefore management focus may need to change. If everything is reporting green, move attention to a different area or metric.

- Don’t dilute the remedy: Some clients set too many service level metrics in their contracts or internal reporting, which limits focus and dilutes the remedies. Keep the metrics focused on the most important and representative areas.

- Trust but verify: Have contractors report summary performance on a regular basis, but also insist they provide underlying detail. Periodically validate the calculations.

- Establish the cadence: Identify and schedule activities that should occur on a monthly, quarterly, or annual basis in managing and improving performance. These activities include report review, data validation, re-visiting processes, retiring existing or establishing new metrics, etc.

- Get better over time: All service delivery should continually improve over time, whether managed in-house or contracted out. Clients would not accept performance today at standards from 10 years ago; likewise, when entering into new contracts, clients should demand that performance standards increase in the years to come. Internally, they should establish an ongoing reporting and continual improvement program.
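The "trust but verify" principle above can be sketched as a simple recalculation: take the underlying detail a contractor provides and check it against the summary figure they reported. The metric (tickets resolved within a target time), the data, the function names, and the tolerance below are all hypothetical, chosen only to show the shape of the check.

```python
def pct_within_target(resolution_hours, target_hours):
    """Percentage of tickets resolved at or under the target time."""
    if not resolution_hours:
        raise ValueError("no ticket detail provided")
    met = sum(1 for hours in resolution_hours if hours <= target_hours)
    return 100.0 * met / len(resolution_hours)

def matches_report(reported_pct, resolution_hours, target_hours, tolerance=0.1):
    """True if the recomputed figure agrees with the reported summary."""
    recomputed = pct_within_target(resolution_hours, target_hours)
    return abs(recomputed - reported_pct) <= tolerance

# Hypothetical ticket detail: hours to resolve each incident in the month.
ticket_hours = [2.0, 3.5, 7.9, 8.0, 12.5, 1.0, 4.0, 9.5, 6.0, 5.5]

recomputed = pct_within_target(ticket_hours, target_hours=8.0)
print(f"Recomputed: {recomputed:.1f}% resolved within 8 hours")
print("Matches a reported 80.0%:", matches_report(80.0, ticket_hours, 8.0))
print("Matches a reported 95.0%:", matches_report(95.0, ticket_hours, 8.0))
```

A mismatch does not by itself mean the contractor is wrong; it may point to a different definition of the metric, which is worth resolving during the periodic validation.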

We look forward to assisting you directly. If you would like to talk through your situation, please feel free to contact us.

– Tim Ryckman, May 2023 – [bio]